The way we listen to audio is changing – it’s becoming bigger, bolder and more immersive than ever before.

While the Dolby Atmos logo can now be found practically everywhere (on your TV, in your local cinema, even on your phone), have you ever wondered what makes it special? How is it different from surround sound? And how does it work in comparison to the familiar stereo format?

In this blog post we’ll answer all of those questions and more, exploring the history of audio playback and all of the exciting things Dolby Atmos is bringing to the table.

All About Stereo

Taking a quick look into the history of sound reproduction, we can see four main steps leading up to the creation of Dolby Atmos.

We began in ‘mono’ – a single channel recorded with a single microphone.

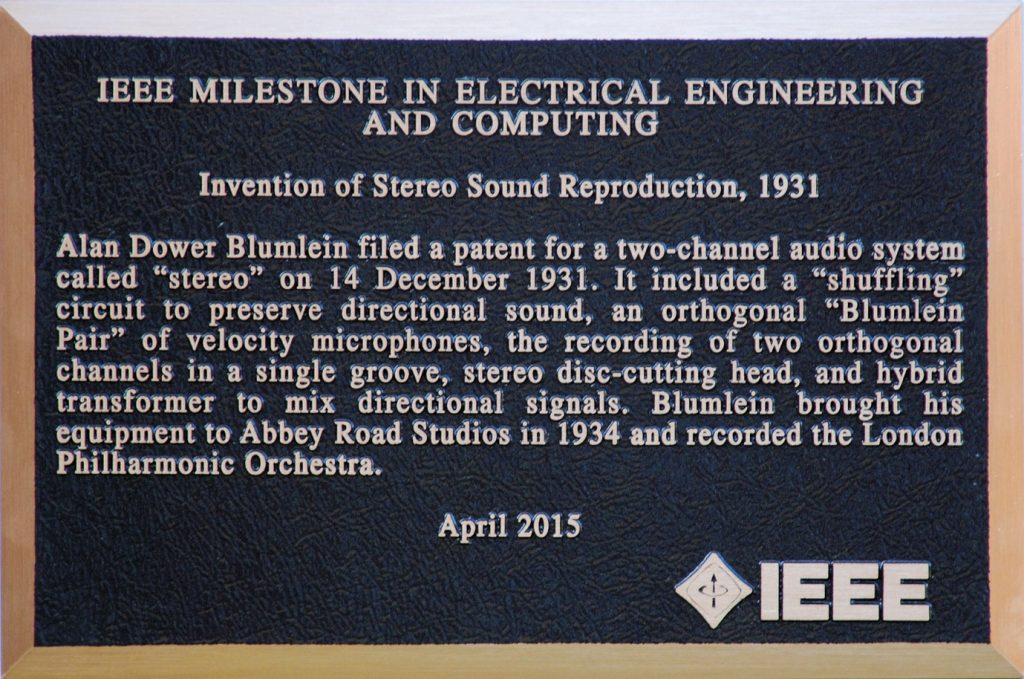

Around the 1930s, stereo audio began to appear. This type of audio can be recorded with two microphones positioned around the sound source (a guitar or piano are common examples), with the signal from each microphone assigned to either the left or right channel. The sound reaches each microphone with slight differences in timing and frequency, creating the illusion of width and space when we listen back on stereo speakers.

A stereo listening setup involves two speakers. When a stereo track is played, an imaginary 1-dimensional ‘sound field’ is created between the speakers. To hear the most convincing ‘sound field’, you’ll either need to use headphones or stay equally distant from the left and right speakers.

We can move the position of a sound in between the left and right channels by decreasing either side’s signal level – this is called ‘panning’. A louder signal on the left side will move the sound towards the left and vice versa. We can also use mixing tools like EQ, dynamic control and reverb to give the illusion that sounds are closer or further away. Still, they remain trapped in the 1-dimensional sound field between the speakers.
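
To make panning a little more concrete, here is a minimal sketch of how a pan control might turn one signal into left and right levels. It assumes a constant-power (sine/cosine) pan law, which is one common choice rather than the only one, and the function names `pan_gains` and `pan_sample` are made up for this example.

```python
import math
from typing import Tuple


def pan_gains(position: float) -> Tuple[float, float]:
    """Return (left_gain, right_gain) for a pan position in [-1.0, 1.0].

    -1.0 is hard left, 0.0 is centre and +1.0 is hard right.
    Assumption: a constant-power (sine/cosine) pan law.
    """
    if not -1.0 <= position <= 1.0:
        raise ValueError("position must be between -1.0 and 1.0")
    # Map the pan position onto an angle between 0 and 90 degrees.
    angle = (position + 1.0) * math.pi / 4.0
    return math.cos(angle), math.sin(angle)


def pan_sample(sample: float, position: float) -> Tuple[float, float]:
    """Scale one mono sample into a (left, right) pair."""
    left_gain, right_gain = pan_gains(position)
    return sample * left_gain, sample * right_gain
```

With this pan law, a centred sound gets a gain of roughly 0.707 on each side, so the overall loudness stays about the same as the sound moves across the stereo field.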

Adding Other Dimensions

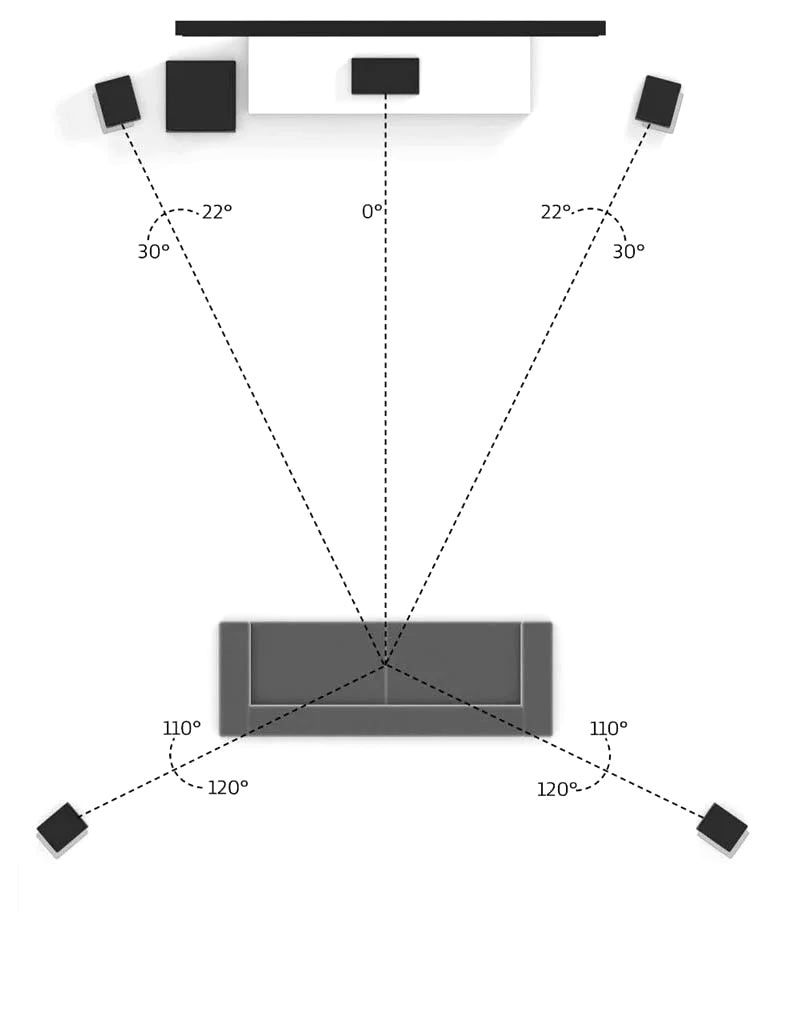

The next step after stereo was to add another dimension to our listening setup. A conventional surround sound format is described as either 5.1 or 7.1, meaning 5 or 7 speakers surrounding you at ear level, plus a subwoofer (the ‘.1’). This creates a 2-dimensional sound field where we can move sounds front-to-back as well as left-to-right.

5.1 is the most common surround sound speaker layout and is usually what you’ll find in a home cinema. It consists of centre, left and right speakers in front of the listener, plus surround left and right speakers slightly behind the listener. With this layout, we can pan sounds not just between a left and right speaker, but between any combination of the 5.

A 7.1 system uses 4 surround speakers, allowing us to split up the rear and side sound effects. In this layout, the side speakers are positioned at about 90 degrees to the listener, while the rear speakers sit behind.

These two layouts can be scaled up for commercial use. In a commercial surround sound cinema, for example, there will be multiple speakers in each position to account for the larger audience.

Expanding on this surround setup even further, we can add either 2 or 4 height channels (written as .2 or .4) above the listener to reach the final step in our journey: a 3-dimensional sound field. With setups like these (such as 7.1.2), you become immersed in audio travelling front-to-back, left-to-right and up-and-down. Combining these makes for endless directional possibilities and adds a whole new creative dimension to the art of audio mixing.
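
If the dotted layout names are new to you, the short sketch below shows how a name like 7.1.2 breaks down into ear-level speakers, subwoofers and height speakers. The `SpeakerLayout` type and `parse_layout` function are hypothetical helpers written for this post, not part of any Dolby tool.

```python
from typing import NamedTuple


class SpeakerLayout(NamedTuple):
    ear_level: int   # speakers surrounding the listener at ear level
    subwoofers: int  # the ".1" low-frequency channel
    height: int      # speakers above the listener (0 if not given)

    @property
    def total(self) -> int:
        return self.ear_level + self.subwoofers + self.height


def parse_layout(name: str) -> SpeakerLayout:
    """Split a layout name such as "5.1" or "7.1.2" into its parts."""
    parts = [int(part) for part in name.split(".")]
    if len(parts) == 2:
        parts.append(0)  # no height channels were written
    if len(parts) != 3:
        raise ValueError(f"unexpected layout name: {name!r}")
    return SpeakerLayout(*parts)
```

For example, `parse_layout("7.1.2").total` counts 10 speakers, while `parse_layout("5.1").height` is 0 because a 5.1 setup has no height channels.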

All of these surround sound systems share one goal: to reproduce audio in a way that replicates how we hear in real life. It’s almost as if the sound is turned into a physical object within the space…

Channel-Based vs. Object-Based

Conventional stereo or surround formats are channel-based, meaning individual tracks in a mix are routed to a single stereo or surround output channel. A pan control on each track determines which speaker(s) the signal is sent to, whether it be left, right, back left, etc. In this format, the mix is committed to a specific number of channels, meaning that in order to listen to the mix, you need a playback device which is optimised for that type of mix and has the right number of speakers.

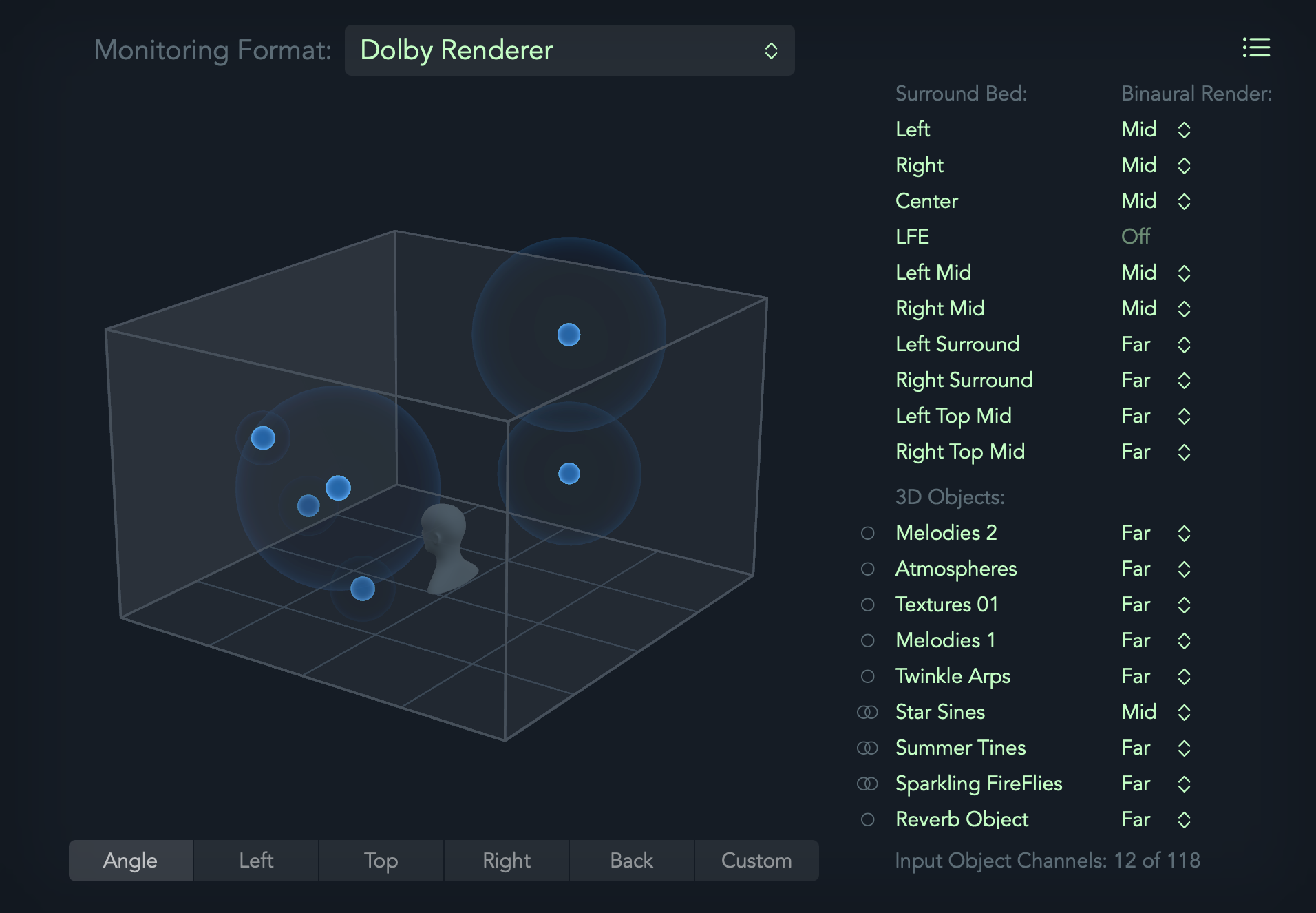

An object-based system like Dolby Atmos removes this restriction. Instead of panning a sound between a fixed number of channels, Dolby Atmos stores its position as metadata, similar to X, Y and Z coordinates in the 3-dimensional sound field. When mixing, this metadata and the audio for that track are sent separately to the Dolby Atmos rendering software, where they are re-combined to make an ‘object’.
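
One way to picture an object is as a small record that keeps the audio and its position side by side without mixing them together. The sketch below is purely illustrative: the class names, field names and coordinate ranges are assumptions made for this post and do not reflect Dolby's actual file format or metadata.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Position:
    # Assumed ranges, chosen only for this example.
    x: float  # left (-1.0) to right (+1.0)
    y: float  # back (-1.0) to front (+1.0)
    z: float  # ear level (0.0) to overhead (+1.0)


@dataclass(frozen=True)
class AudioObject:
    name: str
    samples: List[float]  # a single mono audio signal
    position: Position    # metadata stored separately from the audio


def move(obj: AudioObject, new_position: Position) -> AudioObject:
    """Return the same audio with new position metadata."""
    return AudioObject(obj.name, obj.samples, new_position)
```

Moving the object only changes its metadata. The audio samples are left untouched, which is what lets the position be interpreted later for whatever speakers are available.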

However, the renderer software is not entirely object-based. You can also use it like a conventional channel-based system. This means that you can route some of your tracks to a surround output bus (like 7.1.2) and the surround panning position is baked into the signal rather than stored separately as metadata. These specific channels are referred to as the ‘bed’ in the Dolby Atmos Renderer.

Which should we use, object or bed? It’s easier to use a ‘bed’ for signals that won’t move around the 3D space, or those recorded in stereo or surround (with 2 or more microphones). Only tracks that are routed as a bed can be sent to the LFE channel, so that means any bass-heavy sounds should use a bed.

Objects are better for providing a really precise spatial location, or for signals that are going to move around. Objects can only have one audio signal, so multi-signal recordings like stereo would need multiple objects.

The vital part of Dolby Atmos is its renderer. With the renderer, the finished Dolby Atmos mix can be played back on systems with any speaker layout: stereo, 5.1, 7.1.2 etc. The renderer turns the signals into a channel-based output which fits the speaker layout it’s about to be played on.
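
To give a feel for the idea, here is a toy sketch in which one object position is turned into speaker gains for two different layouts. It is not Dolby's rendering algorithm: the speaker coordinates, the `LAYOUTS` table and the simple "closer speaker gets more signal" rule are all assumptions made for illustration. The subwoofer is left out because objects are not sent to the LFE channel.

```python
import math
from typing import Dict, Tuple

Position = Tuple[float, float, float]  # (x, y, z), assumed coordinate system

# Hypothetical speaker positions: x is left/right, y is back/front, z is height.
LAYOUTS: Dict[str, Dict[str, Position]] = {
    "stereo": {"L": (-1.0, 1.0, 0.0), "R": (1.0, 1.0, 0.0)},
    "5.0": {
        "L": (-1.0, 1.0, 0.0),
        "C": (0.0, 1.0, 0.0),
        "R": (1.0, 1.0, 0.0),
        "Ls": (-1.0, -1.0, 0.0),
        "Rs": (1.0, -1.0, 0.0),
    },
}


def render_gains(object_position: Position, layout_name: str) -> Dict[str, float]:
    """Return one gain per speaker for an object at the given position."""
    speakers = LAYOUTS[layout_name]
    # Toy rule: weight each speaker by the inverse of its distance to the object.
    weights = {
        name: 1.0 / (math.dist(object_position, speaker_position) + 1e-6)
        for name, speaker_position in speakers.items()
    }
    # Normalise so the squared gains add up to 1 (constant overall power).
    norm = math.sqrt(sum(w * w for w in weights.values()))
    return {name: w / norm for name, w in weights.items()}
```

The same object position can be passed to `render_gains` with either layout name. On "stereo" a front-centre object is shared equally between left and right, while on "5.0" it lands almost entirely on the centre speaker.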

Of course, this means that the more speaker channels you have available, the more accurate and precise the 3D sound field will be.

What About Headphones?

Stereo has always been our preferred listening format for music, whether that means a pair of speakers in your home, a live music venue, or listening on-the-go with your phone and a pair of headphones. But how can we make immersive audio with just a standard pair of headphones?

You may be familiar with binaural audio. This involves a recording technique where microphones are placed in a mannequin head to record a sound as if they were human ears. When we listen back on headphones, it’s as if we are inside a 3D sound field reconstruction of the recording location.

Our ears can detect the position of a sound by comparing volume, frequency content and timing differences between the sound in each ear. These differences are created by the physical distance between your ears and the shape of your head (the ‘head shadow’). You can artificially recreate this by applying the same principles to an audio signal, a technique called binaural rendering.
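
The timing cue is the easiest of the three to put a number on. The sketch below estimates it with the Woodworth approximation, a classic textbook formula that treats the head as a simple sphere. The head radius and speed of sound are assumed average values, and the function name is invented for this example.

```python
import math

HEAD_RADIUS_M = 0.0875        # assumed average head radius in metres
SPEED_OF_SOUND_M_S = 343.0    # assumed speed of sound in air


def interaural_time_difference(azimuth_deg: float) -> float:
    """Estimate the arrival-time gap between the ears, in seconds.

    azimuth_deg is the angle of the source away from straight ahead,
    from 0 (directly in front) to 90 (directly to one side).
    Uses the Woodworth spherical-head approximation.
    """
    if not 0.0 <= azimuth_deg <= 90.0:
        raise ValueError("azimuth_deg must be between 0 and 90")
    theta = math.radians(azimuth_deg)
    return (HEAD_RADIUS_M / SPEED_OF_SOUND_M_S) * (theta + math.sin(theta))
```

With these assumed values, a sound directly to one side reaches the nearer ear roughly 0.66 milliseconds before the other, while a sound straight ahead arrives at both ears at the same time.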

Binaural rendering uses HRTF (Head-Related Transfer Function) algorithms. These model a virtual human head based on the average head shape and use it to process the signal. Unfortunately, this means that the further away from the average shape you are, the less realistic the 3D binaural experience will be.

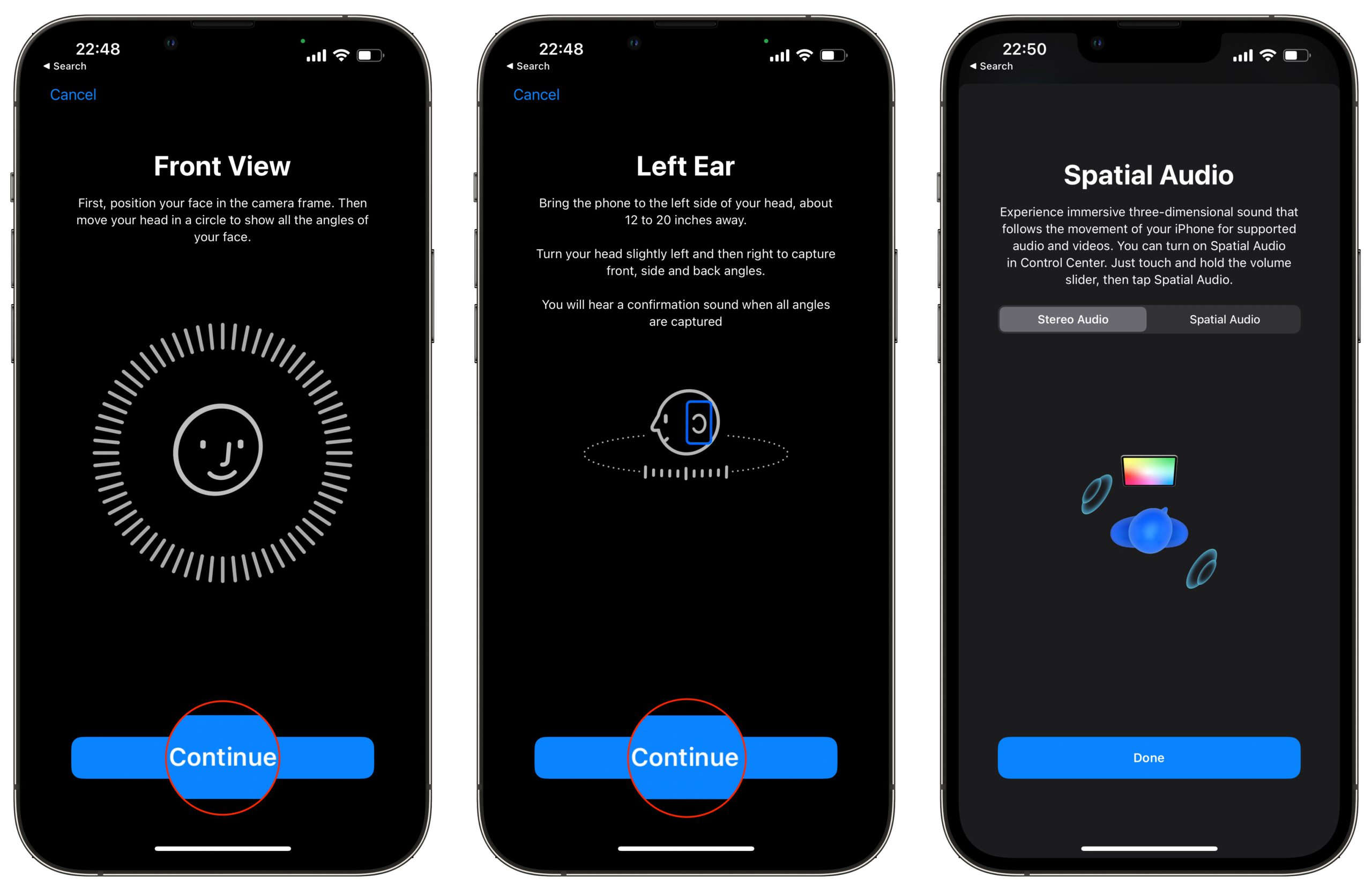

Obtaining a personalised HRTF previously required measuring your head shape with complex technology in a sound-proofed room – not very easy to access. However, the release of iOS 16 this month has made ‘Personalised Spatial Audio’ available to iPhone users. To use it, you’ll need one of the more recent AirPods models plus an iPhone with iOS 16 and a ‘TrueDepth’ camera. The phone can then scan your face and ears in order to optimise the audio output for your unique facial profile.

As well as various speaker systems, Dolby Atmos mixes can also be rendered to binaural audio. This is the vital feature which unlocks the world of Dolby Atmos music for average listeners using conventional headphone or stereo setups. Apple Music’s Spatial Audio with support for Dolby Atmos uses a similar system. Apple Music can now play Dolby Atmos tracks on all AirPods or Apple headphones, plus Apple’s latest devices with the right built-in speakers.

Dynamic head tracking is another important element in Apple Music’s Spatial Audio. This involves monitoring the position of your head and adjusting the audio so it appears to stay in the same place as you move. This enhances music-listening by not only recreating a live music experience but also allowing for our natural head movements when listening to sound.
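
The core idea can be sketched in a few lines: if your head turns one way, the sound has to be shifted the other way by the same amount. The example below only handles turning left and right (yaw) and uses a made-up function name and sign convention, so treat it as an illustration rather than how Apple's implementation works.

```python
def compensated_azimuth(source_azimuth_deg: float, head_yaw_deg: float) -> float:
    """Return where a sound should appear relative to the listener's nose.

    Assumed convention: angles are in degrees, 0 is straight ahead and
    positive values are to the right. head_yaw_deg is how far the head
    has turned from its starting direction.
    """
    relative = source_azimuth_deg - head_yaw_deg
    # Wrap the result into the range [-180, 180).
    return (relative + 180.0) % 360.0 - 180.0
```

For example, if a singer is placed straight ahead and you turn your head 30 degrees to the right, `compensated_azimuth(0.0, 30.0)` returns -30.0, so the singer is now rendered 30 degrees to your left and seems to stay put in the room.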

Is Dolby Atmos the future of music?

At first it seemed unlikely, but with all of these developments towards integrating Dolby Atmos into every listening device and setup we use, the world of immersive audio is effortlessly establishing itself in our everyday lives just as stereo once did.

Do you want to know how to get your tracks mixed and mastered in Dolby Atmos?